It's impossible to escape commentary about artificial intelligence taking over jobs and the world these days. But while AI continues advancing rapidly, we aren't at the point of wholesale human replacement just yet.

However, AI does enable employees to significantly enhance productivity and output. Tools like ChatGPT, Jasper, and Claude.ai provide ways to optimize workflows that previously required manual effort.

This raises the question: can AI-generated content be easily detected? How does AI detection actually work under the hood?

The short answer is that current technology still struggles to reliably identify AI writing, especially sophisticated outputs. Detection relies on pattern recognition, but language models keep getting better at producing human-like text that evades it.

My name is Terry Williams, and I have been using AI content at my SEO agency since ChatGPT came out. Over the past year I've tried tons of AI tools, and even more AI detection tools. This article goes over how AI detectors work and how they recognize AI-generated content. We will also review prompting strategies I've used to generate content that scores 0% on AI detectors.

Why Does Detecting AI Content Matter?

AI text is increasingly prevalent across sectors like journalism, marketing, academia, and even law. Some lawyers even scandalously tried leveraging AI writing in legal documents.

It's not necessarily that AI content itself is bad. But shouldn't we have the option to distinguish human vs. non-human authorship?

Some argue AI detection risks harming writers who get falsely flagged. But providing transparency seems reasonable when AI-generated text can propagate misinformation or get utilized under questionable pretences.

Understanding the limitations and inner workings of AI detection technology is important context. Let's explore the arms race going on between advancing generation and deception capabilities.

How Does AI Text Detection Work?

Given AI's ability to produce seemingly human-like writing, distinguishing artificial vs authentic text can be challenging. However, various tools and techniques aim to detect AI-generated content.

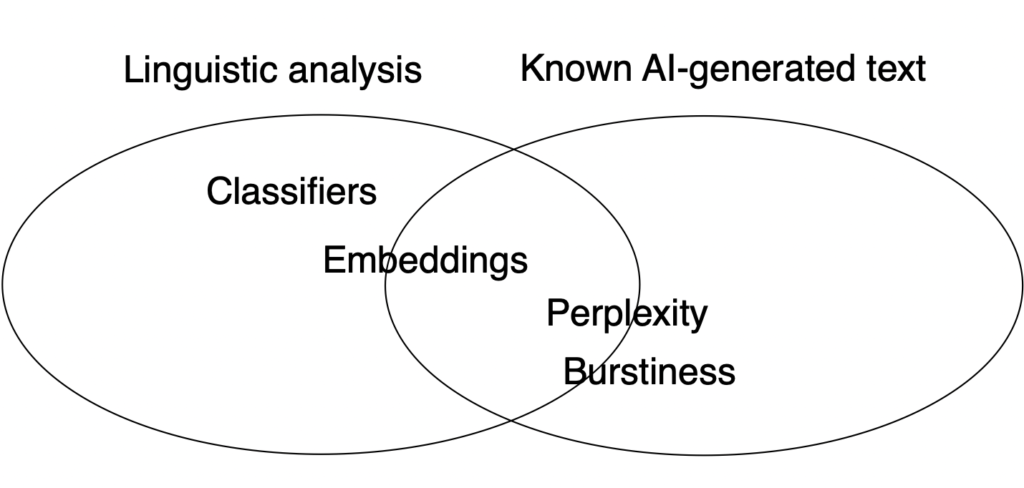

Broadly, AI detection relies on two core approaches:

Linguistic Analysis

This analyzes text for patterns indicative of AI like repetitive phrasing, lack of coherence, anomalous grammar, etc. Differences from natural language help identify computer-generated text.

Comparisons to Known Samples

Newly analyzed text gets compared against existing labeled corpora of both human and AI writing. Pattern similarities help classify the origin with certain statistical confidence.

Specific techniques under these umbrellas include:

- Bayesian classification models

- Neural networks and deep learning

- Stylometry for analyzing writing style

- Text vectorization for semantic clustering

In practice, detectors combine approaches for more robust predictions. But fundamental limitations remain around accurately identifying output from advanced models like ChatGPT, whose human-like writing is hard to tell apart from the real thing.

The technology shows promise but isn't infallible. Let's explore some leading detection tools to analyze their real-world capabilities and shortcomings.

Techniques Used in AI Text Detection

Given the rapid evolution of AI-generated text, reliable detection requires an ensemble approach combining multiple advanced NLP techniques:

Classification Models

These train machine learning classifiers on human vs. AI samples to categorize new texts based on pattern recognition. Different models have varying accuracy tradeoffs.

Embeddings

Generating vector representations of words/sentences allows clustering semantically similar samples. New text gets compared against human/AI embedding distributions.

Perplexity

This measures how "surprised" or uncertain a model is when processing new text, indicating complexity. AI-generated text may have lower perplexity.

Burstiness

"Burstiness" analysis measures how much sentence length, vocabulary, and structure vary across a text. Human writing tends to vary unevenly, while AI outputs tend to lack realistic burstiness.

Grammar Inconsistencies

AI text often contains anomalous grammar like switching from first to third person erratically. Models learn these natural language quirks.

Content Errors

Does the text include obvious factual or logical errors? These can be hallmarks of AI's limited actual knowledge.

Detection systems combine these approaches for more robust results. But as large language models continue maturing, human-fooling outputs will only become more prevalent.

Rather than singular scores, modern detectors provide rich linguistic insights like perplexity and burstiness measurements on texts. This provides greater context instead of just binary AI/human judgements.

But significant challenges remain in keeping pace with AI's rapid evolution. Perfect detection may prove impossible as systems grow more sophisticated and nuanced.

Using Classifiers for AI Text Detection

Classifiers are machine learning models commonly used in AI text detection to categorize writing as human or computer-generated based on patterns.

Classifiers analyze features like:

- Word choice

- Grammar

- Writing style

- Tone

By recognizing linguistic patterns prevalent in AI outputs, classifiers can sort new texts into "human" or "AI" categories.

Think of it like an apple-sorting machine: the classifier is trained on different types of apples to learn their visual features. It then leverages this knowledge to automatically sort new apples it encounters.

For AI detection, classifiers must be trained on labeled datasets containing thousands of texts pre-identified as AI or human-written. Two main approaches are used:

Supervised Classifiers

These train on completely labeled data. For example, a dataset with pieces explicitly marked as "AI" or "Human". The model learns the inherent patterns within each category.
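To make the supervised idea concrete, here is a minimal sketch of a Naive Bayes classifier (one of the Bayesian classification models mentioned earlier) written in plain Python. The class name `NaiveBayesDetector`, the helper `tokenize`, and the four training sentences are all made up for illustration. Real detectors train on thousands of labeled texts and do not publish their exact models, so treat this as a toy and not as how any particular tool works.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z']+", text.lower())


class NaiveBayesDetector:
    """Toy multinomial Naive Bayes with add-one (Laplace) smoothing."""

    def fit(self, texts, labels):
        self.word_counts = {}              # label -> Counter of word frequencies
        self.doc_counts = Counter(labels)  # label -> number of training texts
        for text, label in zip(texts, labels):
            self.word_counts.setdefault(label, Counter()).update(tokenize(text))
        self.vocab = {w for counts in self.word_counts.values() for w in counts}
        return self

    def predict(self, text):
        total_docs = sum(self.doc_counts.values())
        scores = {}
        for label, counts in self.word_counts.items():
            total_words = sum(counts.values())
            # Start from the log prior, then add each word's log likelihood.
            score = math.log(self.doc_counts[label] / total_docs)
            for word in tokenize(text):
                score += math.log((counts[word] + 1) / (total_words + len(self.vocab)))
            scores[label] = score
        return max(scores, key=scores.get)


# Made-up, hand-labeled training data (hypothetical examples).
train_texts = [
    "honestly i kinda loved it, weird little gadget but it works",
    "ugh my arms hurt after two minutes, not gonna lie",
    "in conclusion, this product offers numerous benefits for users",
    "overall, this product provides an effective solution for users",
]
train_labels = ["human", "human", "ai", "ai"]

detector = NaiveBayesDetector().fit(train_texts, train_labels)
print(detector.predict("in conclusion, this product offers an effective solution"))
```

With only four training sentences the model is simply memorizing vocabulary, which is exactly why large, varied labeled datasets matter for this approach.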

Unsupervised Classifiers

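These train on unlabeled datasets, meaning the texts themselves don't have AI/human tags associated. The model must find natural groupings and commonalities within the texts from scratch, which in practice means clustering similar texts together and leaving it to a person to decide what each group represents.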
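A rough sketch of the unlabeled approach is below, using a bare-bones k-means with two clusters. Everything here is an assumption for illustration: the two style features (average sentence length and vocabulary diversity), the function names `features` and `two_means`, and the sample texts. Note that the algorithm only returns cluster numbers. Someone still has to inspect each cluster and decide which one looks like AI writing.

```python
import re


def features(text):
    """Two crude style features: mean sentence length and vocabulary diversity."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[a-z']+", text.lower())
    mean_len = len(words) / len(sentences)
    diversity = len(set(words)) / len(words)  # type-token ratio
    return (mean_len, diversity)


def distance(a, b):
    """Euclidean distance between two feature tuples."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) ** 0.5


def two_means(points, iterations=10):
    """Minimal k-means with k=2, started from the first and last point."""
    centroids = [points[0], points[-1]]
    assignment = []
    for _ in range(iterations):
        # Assign each point to its nearest centroid.
        assignment = [
            min((0, 1), key=lambda k: distance(p, centroids[k])) for p in points
        ]
        # Move each centroid to the mean of its members.
        for k in (0, 1):
            members = [p for p, a in zip(points, assignment) if a == k]
            if members:
                centroids[k] = tuple(sum(dim) / len(members) for dim in zip(*members))
    return assignment


# Unlabeled, made-up sample texts.
texts = [
    "Wow. It broke. I cried a bit. Then I fixed it.",
    "This product offers a range of benefits for users who want results. "
    "This product offers a range of features for users who want value.",
    "No way. My dog ate it. Twice!",
    "The device provides a number of advantages for people who need support. "
    "The device provides a number of options for people who need help.",
]

clusters = two_means([features(t) for t in texts])
print(clusters)
```

Because the features are not scaled, sentence length dominates the distance here. A more careful version would normalize each feature first.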

Once trained on their respective datasets, both classifier types can categorize new writing based on patterns learned during training.

Combinations of supervised and unsupervised classifiers provide a robust ensemble approach. But significant volumes of training data are required for accurate AI detection capabilities.

Using Embeddings for AI Text Detection

In NLP, embeddings represent words or phrases as numerical vectors that encode semantic meaning. This allows clustering similar language elements based on meaning.

For AI detection, text can first be converted into embedding representations. Then a classifier model analyzes the resulting word and phrase vectors to categorize the text as human or artificially generated.

Embeddings capture key patterns indicative of AI writing:

Word Frequency Analysis

One method for detecting AI-generated text is analyzing word frequency. Text created by AI systems often contains unusually high repetition of certain words or phrases.

For example, an AI may repetitively use the same vocabulary when discussing a topic rather than employing synonyms and linguistic variety like humans. Phrases like "As stated previously" may also appear excessively, revealing the AI's algorithmic nature.

By scanning text to identify statistical anomalies in how often specific words or short phrases appear, machine learning models can flag potential AI content. However, this method can struggle with more sophisticated AI that better mimics realistic word diversity.

Advanced models like GPT-3 have massive vocabulary knowledge, making purely frequency-based analysis less reliable. But combining this technique with other linguistic approaches provides a broader perspective on a text's human-likeness.
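Here is a small sketch of what frequency-based scanning might look like. The stopword list, the function name `overused_words`, the `min_share` threshold, and the sample text are all my own placeholders, not values taken from any real detector.

```python
import re
from collections import Counter

# A tiny, hand-picked stopword list (an assumption; real lists are much longer).
STOPWORDS = {"the", "a", "an", "and", "of", "to", "in", "is", "it", "that", "for", "on", "as"}


def overused_words(text, min_share=0.05):
    """Return content words whose share of all content words is at least min_share."""
    words = [w for w in re.findall(r"[a-z']+", text.lower()) if w not in STOPWORDS]
    counts = Counter(words)
    total = len(words)
    return {
        word: round(count / total, 3)
        for word, count in counts.most_common()
        if count / total >= min_share
    }


sample = (
    "As stated previously, the product is effective. "
    "As stated previously, the product is reliable. "
    "The product is effective and reliable."
)

# "product" makes up 3 of the 11 content words in the sample.
print(overused_words(sample, min_share=0.25))
```

On its own this only tells you a word is frequent. Deciding whether that frequency is unusual requires comparing against a baseline of human writing on the same topic.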

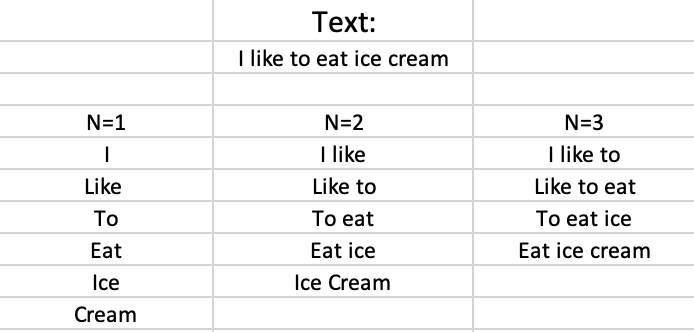

N-Gram Analysis

Looking at patterns in sequences of multiple words, known as n-grams, can also help identify hallmarks of artificial writing.

N-grams are sets of consecutive words together, like pairs (bigrams), triplets (trigrams), four-word groupings, etc. Analyzing frequencies of n-grams uncovers repetitive phrases and unnatural sentence structures that statistically deviate from human patterns.

For example, an AI text may overuse the same templated sentence openings like "According to research" or "Studies have shown that". The repetition becomes evident in bigram and trigram analysis.

By scanning for n-grams that appear unusually often, classifiers can spot odd rhythms and sequences in AI outputs. However, generative language models continue improving in reducing blatant repetition.

Advanced systems like GPT-3 produce remarkably human-like sentence variation. So n-gram analysis works best alongside other techniques, rather than as a standalone detection strategy.
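The n-gram counting described above can be sketched in a few lines. The function names `ngrams` and `repeated_ngrams`, the `min_count` threshold, and the sample text are illustrative assumptions. This version also lets n-grams run across sentence boundaries, which a more careful implementation would probably avoid.

```python
import re
from collections import Counter


def ngrams(tokens, n):
    """Return every run of n consecutive tokens as a tuple."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def repeated_ngrams(text, n=3, min_count=2):
    """Return the n-grams that appear at least min_count times."""
    tokens = re.findall(r"[a-z']+", text.lower())
    counts = Counter(ngrams(tokens, n))
    return {" ".join(gram): c for gram, c in counts.items() if c >= min_count}


sample = (
    "Studies have shown that sleep matters. "
    "Studies have shown that diet matters. "
    "According to research, exercise helps."
)

print(repeated_ngrams(sample, n=3))
```

Running it on the sample surfaces the templated opener "studies have shown" and its overlapping neighbor "have shown that", each appearing twice.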

Syntactic Analysis

Flaws in grammar, syntax, and overall sentence structure provide another signal for identifying AI writing. While modern generative AI has vastly improved linguistic skills, some weaknesses remain.

Syntactic analysis checks qualities like:

- Consistent verb tense, pronoun usage, and point of view. AI tends to erratically switch perspectives.

- Proper subject-verb agreement. AI sometimes mismatches singular/plural forms.

- Correct article usage for nouns. AI struggles with appropriate "a" vs "an" usage.

- Grammar eccentricities like run-on sentences. AI mimics human informality but often misses the mark.

- Overly complex or overly simple sentences. AI skews too high or low in readability.

By computationally evaluating these elements, classifiers pinpoint syntactic inconsistencies suggestive of AI authorship. Of course, human writers make grammar mistakes too, so this approach is most effective in combination with other analysis methods. But peculiarities in syntax remain a strong supplementary signal.
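As one example of a syntactic check, here is a very crude sketch that counts point-of-view switches between sentences. The pronoun lists and the function names `sentence_perspective` and `perspective_switches` are assumptions of mine. Plenty of perfectly human writing mentions both "I" and "they", so a count like this is a weak hint at best.

```python
import re

FIRST_PERSON = {"i", "me", "my", "we", "us", "our"}
THIRD_PERSON = {"he", "she", "they", "him", "her", "them", "his", "their"}


def sentence_perspective(sentence):
    """Label a sentence 'first' or 'third' if it uses only one kind of pronoun."""
    words = set(re.findall(r"[a-z']+", sentence.lower()))
    has_first = bool(words & FIRST_PERSON)
    has_third = bool(words & THIRD_PERSON)
    if has_first and not has_third:
        return "first"
    if has_third and not has_first:
        return "third"
    return None  # mixed pronouns or none at all


def perspective_switches(text):
    """Count changes of perspective between consecutive labeled sentences."""
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    labels = [label for label in map(sentence_perspective, sentences) if label]
    return sum(1 for a, b in zip(labels, labels[1:]) if a != b)


sample = (
    "I tested the device for a week. They found it awkward to hold. "
    "My arms were sore. She returned it the next day."
)

print(perspective_switches(sample))
```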

Semantic Analysis

Does the semantic meaning overall seem coherent or are there logical gaps? Lack of overarching coherence indicates AI text.

Converting text into vectorized embeddings enables leveraging machine learning approaches to identify AI patterns based on vector similarities and differences.

But generating perfectly human-like embeddings remains an active challenge. Sophisticated AI aimed at avoiding detection complicates things through its inherent language mastery.
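To show the vector-similarity idea in miniature, the sketch below compares a new text against the average vector of a few human and AI samples using cosine similarity. One big caveat: the `embed` function here is just a bag-of-words count vector standing in for a real embedding. Actual detectors would use dense vectors from a trained neural model. The function names and sample sentences are made up for illustration.

```python
import math
import re
from collections import Counter


def embed(text):
    """Stand-in 'embedding': a sparse bag-of-words count vector."""
    return Counter(re.findall(r"[a-z']+", text.lower()))


def cosine(u, v):
    """Cosine similarity between two sparse vectors stored as Counters."""
    dot = sum(u[w] * v[w] for w in u if w in v)
    norm_u = math.sqrt(sum(x * x for x in u.values()))
    norm_v = math.sqrt(sum(x * x for x in v.values()))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


def centroid(vectors):
    """Average a list of sparse vectors into one representative vector."""
    total = Counter()
    for vec in vectors:
        total.update(vec)
    return Counter({w: c / len(vectors) for w, c in total.items()})


def nearest_label(text, centroids):
    """Return the label whose centroid is most similar to the text."""
    vec = embed(text)
    return max(centroids, key=lambda label: cosine(vec, centroids[label]))


# Made-up reference samples (hypothetical).
human_samples = [
    "my arms were jelly after one go lol",
    "honestly it looks silly but my arms felt it",
]
ai_samples = [
    "this product offers numerous benefits for fitness enthusiasts",
    "this product provides an effective workout for users",
]

centroids = {
    "human": centroid([embed(t) for t in human_samples]),
    "ai": centroid([embed(t) for t in ai_samples]),
}

print(nearest_label("this product offers an effective workout", centroids))
print(nearest_label("my arms felt like jelly", centroids))
```

The comparison logic stays the same if you swap `embed` for a real embedding model. Only the vectors get richer.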

Using Perplexity for AI Text Detection

Perplexity provides another metric to potentially identify AI-generated text. In NLP, perplexity measures how "surprised" or uncertain a language model is when encountering new text.

The intuition is that an AI system would find its own text less surprising compared to unique human writing. This lower "perplexity score" may indicate artificial generation.

For example:

Human Text: "The climate crisis demands urgent action to curb emissions and protect the planet."

AI Text: "Climate change poses big challenges. Emissions must be reduced to mitigate global warming."

The AI text contains more familiar phrasing that its own generative architecture has seen during training. This results in lower perplexity.

Meanwhile, the human text has more diverse vocabulary and sentence structure. This leads to higher complexity and perplexity for the AI model.

In practice, perplexity is used to benchmark the predictiveness of language models on test datasets. State-of-the-art models score very low perplexity on diverse corpora, indicating advanced generation capabilities.

So perplexity alone may struggle to identify sophisticated AI outputs designed to mimic human complexity. But it provides another useful statistical measure to incorporate into ensemble detection approaches.

Like all text metrics, perplexity scores represent probability rather than perfect binary judgement. The range of natural language complexity means perplexity should be one consideration among many when evaluating writing originality.
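For readers who want to see the math, perplexity is the exponential of the average negative log probability a model assigns to each word. The sketch below uses a tiny bigram model with add-one smoothing as a stand-in for a real language model. The class name `BigramModel` and the four-line reference corpus are assumptions, and the corpus is deliberately written to resemble the "AI" phrasing so the effect is visible. The two test sentences are the human and AI examples from above.

```python
import math
import re
from collections import Counter

START = "<s>"  # marker for the beginning of a text


def tokenize(text):
    """Lowercase, split into words, and prepend the start marker."""
    return [START] + re.findall(r"[a-z']+", text.lower())


class BigramModel:
    """Toy bigram language model with add-one (Laplace) smoothing."""

    def __init__(self, corpus):
        self.context_counts = Counter()  # how often each word precedes another
        self.bigram_counts = Counter()   # how often each word pair occurs
        vocab = set()
        for line in corpus:
            tokens = tokenize(line)
            self.context_counts.update(tokens[:-1])
            self.bigram_counts.update(zip(tokens, tokens[1:]))
            vocab.update(tokens[1:])
        self.vocab_size = len(vocab) + 1  # +1 slot for unseen words

    def prob(self, prev, word):
        """Smoothed probability of `word` following `prev`."""
        return (self.bigram_counts[(prev, word)] + 1) / (
            self.context_counts[prev] + self.vocab_size
        )

    def perplexity(self, text):
        """exp of the average negative log probability per word."""
        tokens = tokenize(text)
        if len(tokens) < 2:
            raise ValueError("text must contain at least one word")
        log_prob = sum(math.log(self.prob(p, w)) for p, w in zip(tokens, tokens[1:]))
        return math.exp(-log_prob / (len(tokens) - 1))


# Hypothetical reference corpus standing in for a model's training data.
reference_corpus = [
    "climate change poses big challenges",
    "emissions must be reduced to mitigate global warming",
    "global warming poses big risks",
    "climate change must be reduced",
]
model = BigramModel(reference_corpus)

human_text = "The climate crisis demands urgent action to curb emissions and protect the planet."
ai_text = "Climate change poses big challenges. Emissions must be reduced to mitigate global warming."

print("human:", round(model.perplexity(human_text), 1))
print("ai:   ", round(model.perplexity(ai_text), 1))
```

The AI example scores lower because nearly every word pair in it already appears in the reference corpus, while the human example is made of pairs the model has never seen.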

Using Burstiness Analysis for AI Detection

"Burstiness" refers to spikes or fluctuations in the statistical patterns of text. When writing, humans naturally display linguistic burstiness - uneven variation in things like sentence length, vocabulary diversity, and punctuation usage.

But AI-generated text may lack realistic human-like burstiness due to repeating templated patterns derived from training data. The unnatural uniformity can help reveal artificial origins.

For example, human writing has high burstiness in:

- Sentence length variation - we use short and long sentences. AI tends toward uniform lengths.

- Word diversity - humans employ varied vocabulary. AI repeats common words.

- Grammatical irregularity - our grammar isn't perfectly consistent. AI patterns are steady.

- Punctuation and emojis - humans have uneven usage. AI is more formulaic.

By computationally analyzing the burstiness of various linguistic elements, classifiers can detect texts that seem artificially consistent or formulaic in their patterns.

However, state-of-the-art generative AI can display high linguistic variation matching human burstiness through massive training on diverse texts. So while low burstiness can signal artificial writing, detection difficulty increases as AI gains experiential breadth.

In practice, burstiness analysis provides another useful statistical measure for spotting AI text generation rather than a singular smoking gun. Combining its probabilities with other linguistic analysis methods leads to the most robust results. But human-fooling AI necessitates tradeoffs.
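Detection tools don't publish their exact burstiness formulas, so the sketch below uses one plausible stand-in: the coefficient of variation of sentence length (standard deviation divided by the mean). The function names and sample texts are my own assumptions.

```python
import re
import statistics


def sentence_lengths(text):
    """Return the word count of each sentence in the text."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    return [len(s.split()) for s in sentences]


def burstiness(text):
    """Coefficient of variation of sentence length (stdev / mean)."""
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0  # not enough sentences to measure variation
    return statistics.pstdev(lengths) / statistics.mean(lengths)


uniform = "The device is light. The handle is soft. The motion is odd. The price is fair."
varied = (
    "I tried it. Honestly, after about ten minutes of shaking the thing "
    "in my living room, my arms were done. Ouch."
)

print("uniform:", round(burstiness(uniform), 2))
print("varied: ", round(burstiness(varied), 2))
```

The uniform sample scores exactly zero because every sentence has four words, while the varied sample mixes a one-word sentence with a seventeen-word one. The same calculation could be applied to vocabulary diversity or punctuation counts per sentence.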

Who Is Interested in Detecting AI Text?

While schools quickly come to mind as interested in identifying AI-generated text from students, many sectors have incentives to distinguish human vs. artificial writing:

Academic Researchers

Researchers developing NLP models and studying language itself need to benchmark AI outputs and advance detection techniques.

Businesses

Brands want to detect AI-fueled activities like fake reviews, spam, fraud, and impersonation to increase trust.

Law Enforcement

Authorities aim to identify AI text involved in crimes like identity theft, impersonation, and cyberbullying.

Social Networks

Platforms like Facebook and Twitter can detect bot accounts spreading misinformation using AI text detection.

News Media

Journalism organizations can fact-check stories and sources by identifying AI disinformation campaigns.

Government Agencies

Governments want to eliminate AI-powered propaganda and election interference through text analysis.

General Public

Everyday readers want reassurance that information they consume online is authentic and produced by real humans.

Reliable AI detection provides value across many sectors by acting as a guardrail against deception. It also enables researchers to benchmark systems and study language itself through comparative analysis.

But ethical concerns around false positives and over-reliance on imperfect technology remain. Nuanced human judgement is still crucial, using AI signals as part of a balanced determination.

Does Google Detect AI Content? Implications for SEO

While Google does penalize "automatically-generated spammy content", they have clarified that AI-written text alone does not violate guidelines. The content quality itself determines penalties.

So ChatGPT and tools like Claude.ai pose no inherent ranking risks. Google states that its systems are not currently scanning for AI text itself.

However, low-quality outputs like repetitive or purely template-based AI content may get flagged as spammy. The solution is ensuring thoughtful human oversight.

In my experience, the best approach for SEO is collaborating with AI tools to produce high-quality, unique content. Combining AI generation with human refinement results in optimized pages.

The human touch enhances relevancy, original perspectives, and nuance. This results in content that appears more "authentically human".

As a bonus, the hybrid approach means pages are less likely to trigger plagiarism detectors or be flagged as AI-generated. This provides peace of mind.

But for SEO, the focus should remain on satisfying searcher needs and Google's quality guidelines versus solely masking AI origins. Search engines ultimately care about utility over source.

Therefore, responsible AI content creation that puts quality first poses no inherent SEO risk despite questionable detectors. Google rewards usefulness, not just human-sounding text. Blending AI with strategic human oversight unlocks content possibilities.

Top Tools for Detecting AI-Written Content

While human discernment remains important, software can help identify text statistically likely to be AI-generated:

Originality

Originality offers robust AI detection and plagiarism checks tailored for marketing content and copywriting. It provides granular percentage scores on AI likelihood for selections of text.

Copyleaks

Copyleaks specializes in academic plagiarism detection, but also includes AI text recognition capabilities. It's easy to use and offers free limited scans.

Grammarly

The popular writing assistant Grammarly recently added basic AI detection to its grammar/style checks for subscribers.

ProWritingAid

ProWritingAid combines grammar/style editing with a new AI tool to flag potential AI content. Accuracy seems questionable but provides a general sense.

ZeroGPT

ZeroGPT focuses specifically on detecting text created by systems like GPT-3 and ChatGPT. It highlights risky sentences and provides confidence scores.

Manual Review

Careful human analysis looking for patterns like repetition remains essential. AI detectors are imperfect supplements to human discernment.

For most use cases, tools like Originality and ZeroGPT that specialize in AI detection provide a helpful secondary perspective. But human judgement is still critical for balanced assessments, so avoid over-relying on imperfect tech.

The ideal approach combines prudent qualitative evaluation with detectors as a pointer for areas needing closer examination. AI judgement continues advancing but still has limitations.

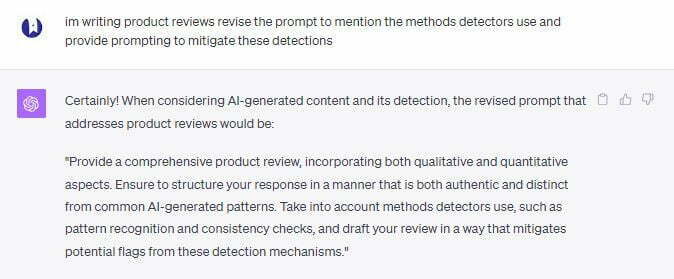

Prompting That Can Beat Current AI Detection Tools

What better way to figure out what prompts to use to beat these detection tools than to use AI itself to develop them?

Case Study

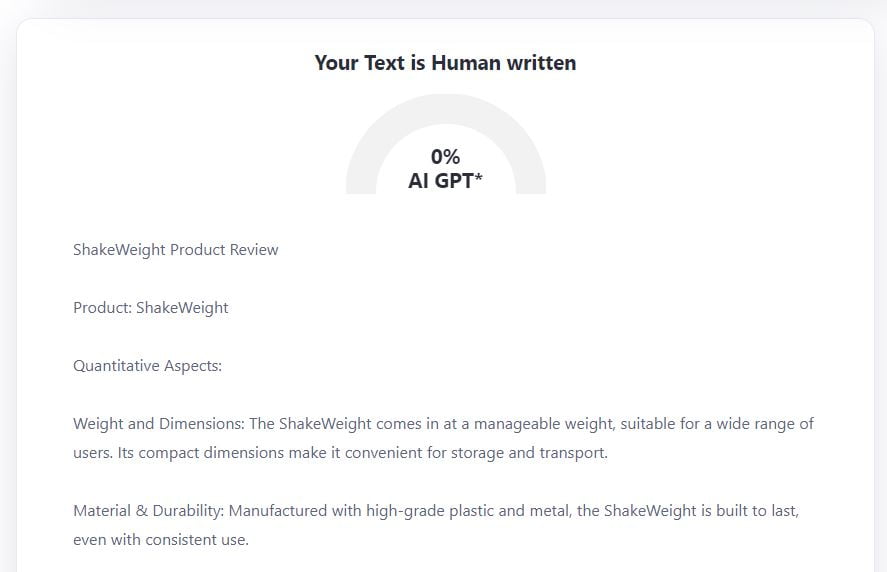

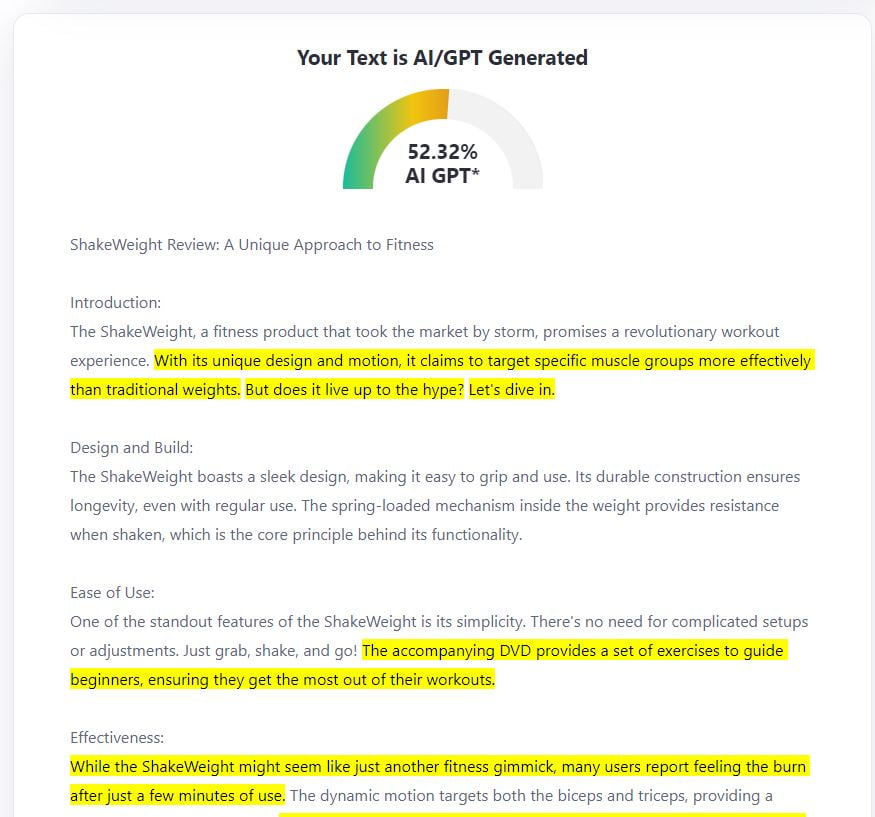

Using the prompt above, I've created a review for the Shakeweight. Let's see how the content stacks up on ZeroGPT's detection tool.

Here are the results for the content produced by GPT-4 with the prompt mentioned above.

The content is much longer than the image shows, but for the sake of saving space I've cropped it so it mainly displays the score. As we can see, with this prompt we've managed to fool the AI detection tool.

Now, let's try without the prompt:

So, without prompting to beat AI detectors, our content from GPT-4 comes back at 52% AI. WOW. This goes to show that AI detectors can still be fooled with the proper prompting.

Ethical Considerations of AI Detection

While beneficial in many contexts, using AI detection tools also raises important ethical questions:

Writer Disempowerment

The advent of advanced generative writing tools provides new creative frontiers for writers to draw inspiration from AI capabilities. However, the spread of detection tools may discourage or even disempower authors from openly experimenting with these emerging resources.

Presumption of Guilt

The nature of synthetic media necessitates safeguards against misuse. However, presuming creative work as "guilty" of being AI-generated until proven otherwise invites risks of its own.

Marginalized creators may feel unfairly profiled if subjected to intense scrutiny under a "guilty until proven innocent" lens. Harms emerge if this deters participation or amplifies existing inequities in publication access.

Over-reliance

AI detectors provide helpful statistical clues about creative origins but remain imperfect and rebuttable. Placing absolute faith in their judgements contradicts the nuances of language itself.

While assisted by technology, human discernment ultimately safeguards us from the excesses of automation and probabilistic determinism. When in doubt, our personal sense of quality, coherence and meaning must trump cold metrics.

False Accusations

Even used responsibly, AI detection will inevitably result in some false accusations of synthetic content creation due to the imperfect nature of the technology.

The associated stigma and assumed dishonesty of these incorrect judgements inflict wholly undeserved harms upon writers whose creativity is unfairly questioned.
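Some quick arithmetic shows why false accusations add up even when a detector sounds accurate. All the numbers below are hypothetical: 1,000 submissions, 10% of them AI-written, a detector that catches 95% of AI text and wrongly flags 5% of human text. The function name `false_accusations` is made up for this example.

```python
def false_accusations(total_texts, ai_share, true_positive_rate, false_positive_rate):
    """Return (wrongly flagged human texts, share of all flags that are wrong)."""
    human_texts = total_texts * (1 - ai_share)
    ai_texts = total_texts * ai_share
    flagged_human = human_texts * false_positive_rate  # false accusations
    flagged_ai = ai_texts * true_positive_rate         # correct flags
    share_wrong = flagged_human / (flagged_human + flagged_ai)
    return flagged_human, share_wrong


# Hypothetical scenario, not measured data.
flagged, share = false_accusations(
    total_texts=1000,
    ai_share=0.10,
    true_positive_rate=0.95,
    false_positive_rate=0.05,
)
print(f"{flagged:.0f} human writers flagged; {share:.0%} of all flags are wrong")
```

Under these assumed numbers, 45 human writers get flagged alongside 95 genuine AI texts, so roughly one flag in three lands on an innocent writer. The fewer AI texts there are in the pool, the worse that ratio gets.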

Transparency

If writers face risks from probabilistic AI detection, they deserve full transparency into the technological processes and data generating these verdicts.

With open inspection into how their writing is analyzed and flagged, creators can audit for technical defects, biases, and mischaracterizations when challenging unfair determinations.

Accountability

Who is ultimately accountable for harms caused by incorrect or overzealous AI detections? More accountability is needed.

To wield these emerging tools ethically, we must support authentic creation while mitigating punitive over-reach and false positives. Fostering responsible use requires ensuring due process, transparency, oversight, and human discretion.

Like any fallible technology applied to social contexts, we must question how AI detectors could concentrate power, silence voices, and introduce new systemic biases if deployed irresponsibly. Our shared humanity must guide our AI.

The Future Trajectory of AI Writing and Detection

The advent of ChatGPT kicked off a turbulent new era of synthetic media. While current AI detection falters on advanced outputs, the technology remains in its infancy.

As generative writing rapidly evolves more nuanced skills, researchers race to counteract deception risks. But perfectly identifying human-fooling AI may ultimately prove mathematically impossible.

OpenAI scientists have proposed stealthily watermarking AI content with invisible signals to indicate its origin. However, the feasibility and ethics of this approach are debatable.

Beyond text, AI can already generate deceptive imagery, audio, and video complicating matters further. And the stakes extend beyond mere detection - synthetic media can automate mass disinformation and fraud.

Harnessing AI safely will require a balanced combination of technical progress and prudent policymaking. Rather than outright bans, a measured approach enabling innovation while mitigating harms is needed.

Inclusive public consultation will be critical as more of these thorny ethical dilemmas emerge touching health, safety, privacy, and human rights. Maintaining public trust and oversight remains imperative.

The genie is out of the bottle when it comes to rapid generative AI advancements. But society retains some agency in steering this volatile, unpredictable trajectory toward equitable ends that counteract deception and augment human knowledge.

With ethical guidance, advanced synthetic media could profoundly enhance sectors like medicine, education, and science over time. But we must boldly confront the existential challenges and opportunities ahead rather than reactively drift into dystopia. Our collective wisdom must steer our collective technological might.

FAQ

How accurate are current AI detection tools?

Most tools boast 95%+ accuracy, but real-world results vary greatly based on factors like text source and length. Accuracy rates should be scrutinized.

What is synthetic media?

Synthetic media refers to artificial imagery, audio, video, and text generated by AI. It has many beneficial uses but risks include misinformation.

Do detectors work better on some AI systems than others?

Yes, detectors tend to be more accurate on older AI like GPT-2 and struggle more with sophisticated models like GPT-3 and ChatGPT, whose output reads as more human.

Can generators be trained to purposefully trick detectors?

Absolutely. AI can be explicitly fine-tuned to fool detectors through adversarial techniques and learned deception. This cat-and-mouse game is ongoing.

How can I manually spot AI-generated text?

Look for subtle repetitive patterns, sterile or formulaic tone, inconsistencies, factual errors, and logical gaps indicating a lack of human insight.

What regulation exists around synthetic media?

Limited regulation exists currently, but governments are accelerating policymaking. Main goals are ensuring attribution and minimizing harms.

Will perfect detection ever be possible?

Given AI's rapid evolution, perfectly distinguishing advanced synthetic media may become mathematically impossible, forcing tradeoffs.

How can I responsibly leverage generative AI?

Use it transparently, focus on creativity over deception, emphasize quality, attribute your sources, and keep a human in the loop.